hexaglycogen [they/them, he/him]

- 7 Posts

- 13 Comments

37 · 13 days ago

the chatgpt dialect goes crazy

281 · 18 days ago

cancer doesn’t fail on any moral level. It simply is.

a misfolded protein is the most efficient structure a protein can be in.

there are billions of dollars being churned around in running for congress, and they’re getting a salary of like, 200k a year. good luck running for congress without representing some very deep pockets.

I don’t judge cancer. It’s just efficient at growth, very efficient, and in a way that is unhelpful.

I don’t think the system is evil, i think it needs treatment.

21 · 30 days ago

The attempt at censoring here is ineffective: one can still read the name under it, because the censor is a black highlighter rather than a true black marker.

9 · 6 months ago

I very much feel the same way. it’s easier to give gifts than do chores.

I was thinking I had more to say but I don’t actually, I think it really just comes down to that for me.

45·6 个月前

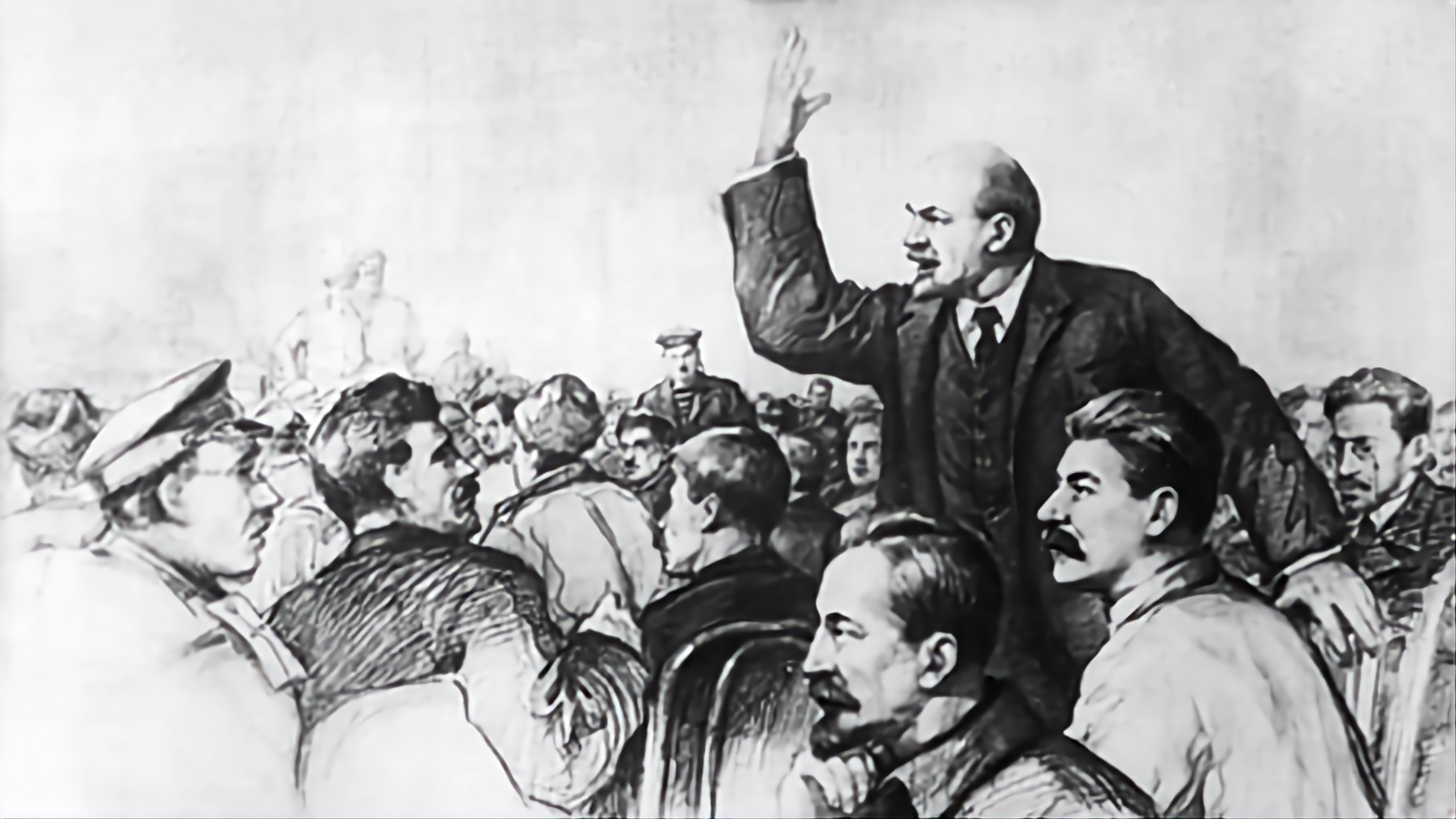

45·6 个月前go in expecting a deconstruction of red scare propaganda from a modern lens

leave with more anticommunist slop

10 · 7 months ago

to be honest i was under the impression that he was based entirely because of his name and nothing else

3 · 7 months ago

From my understanding, misalignment is just shorthand for something going wrong between what action is intended and what action is taken, and that seems to be a perfectly serviceable word to have. I don’t think “poorly trained” captures stuff like goal mis-specification well (e.g., asking it to clean my house and it washes my laptop and folds my dishes), and it feels a bit too broad. Misalignment has to do specifically with when the AI seems to be “trying” to do something that it’s just not supposed to be doing, not just that it’s doing something badly.

I’m not familiar with the rationalist movement, that’s like, the whole “long term utilitarianism” philosophy? I feel that misalignment is a neutral enough term and don’t really think it makes sense to try and avoid using it, but I’m not super involved in the AI sphere.

1 · 7 months ago

you have to touch it with your body

1 · 7 months ago

i’d like to clarify, the stick can’t be broken but that’s not because of it being especially durable. it’s a regular stick, but any time that it’s in a situation where it would be broken, something conveniently happens to prevent it.

2 · 7 months ago

you can schedule to get into bed by the time it happens. it’s part of the curse.

3 · 7 months ago

you can adjust how long you sleep but only within a range of 7 to 9 hours. after 9 hours you’re forced awake and you physically can’t wake up until you’ve slept at least 7 hours.

why use ai to make porn of made up people when i can just visualize shadow the hedgehog getting pegged in my mind’s eye